Reducing memory usage by removing module imports

Frappe broadly runs three types of Python processes in production:

- Web worker (Gunicorn)

- Background worker (RQ worker)

- Scheduler (a simple infinite loop that enqueues background jobs)

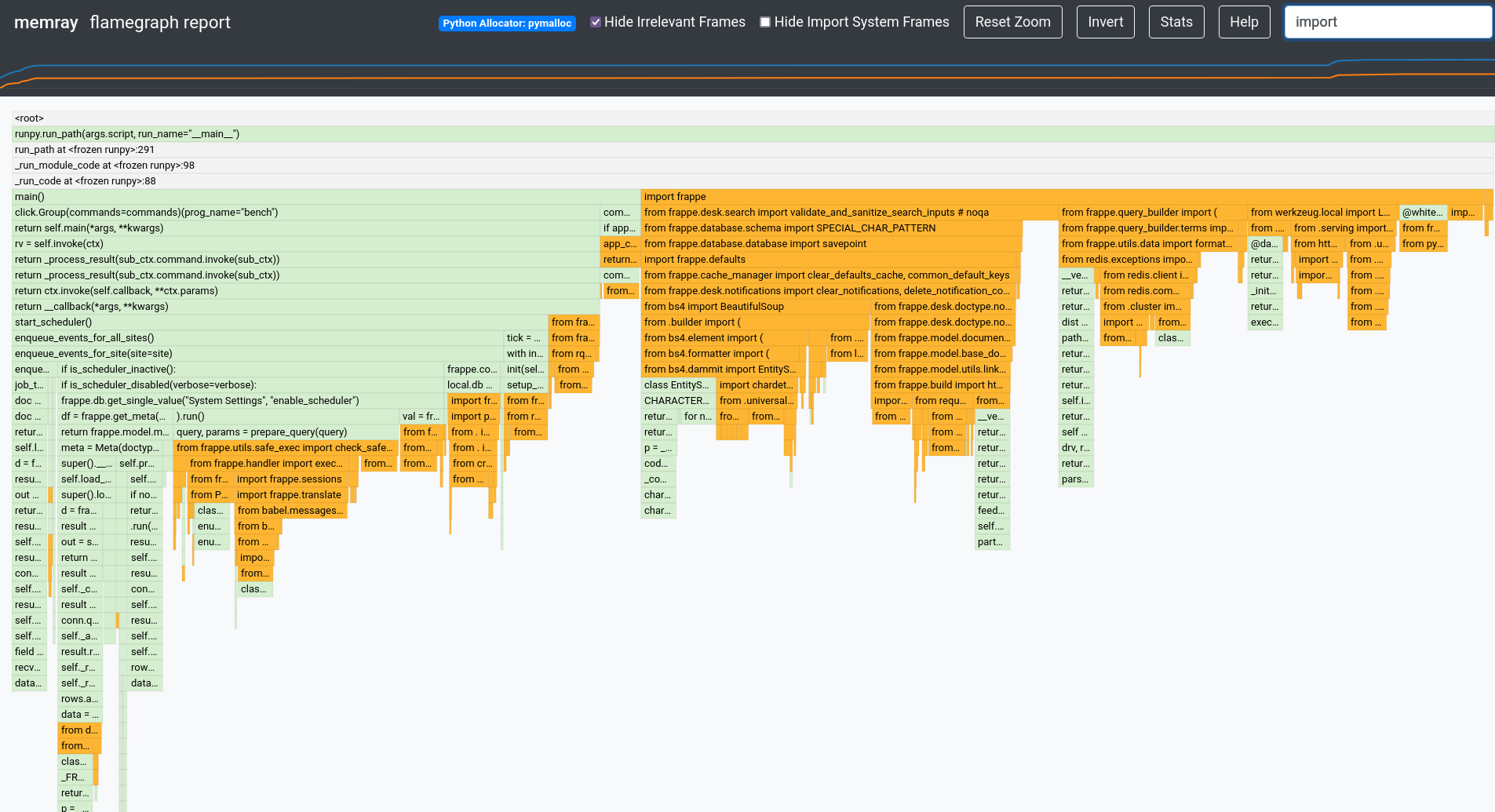

Since Frappe is a batteries-included framework, it does a lot out of the box, but not everything it provides needs to be loaded into memory all the time. The first thing we did was gather statistics on "what" consumes memory. In all three processes, most of the memory cost came from imported Python modules, including third-party libraries. We tried several tools to analyze this and ultimately settled on Memray, a memory profiler that traces every allocation (rather than sampling) and can attach to any Python process, even one that is already running.
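Memray is the right tool for the full picture (native allocations, flame graphs, attaching to live processes). For a rough, dependency-free first look at what a single import costs, the standard library's tracemalloc can help. The sketch below is a stdlib stand-in, not how Memray works, and `import_cost` is a hypothetical helper:

```python
import importlib
import sys
import tracemalloc


def import_cost(module_name: str) -> int:
    """Roughly estimate the bytes a first import leaves allocated on the Python heap.

    Only allocations made through Python's allocators are traced, so memory
    that C extensions allocate directly may be missed. Returns 0 if the module
    is already imported, since re-importing allocates nothing new.
    """
    if module_name in sys.modules:
        return 0
    tracemalloc.start()  # tracing starts from zero here
    try:
        importlib.import_module(module_name)
        current, _peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return current
```

Running it over a list of candidate modules in a fresh interpreter gives a quick ranking; modules already pulled in by an earlier import report 0, so the order you check them in matters.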

Memray's flame graph output was enough to identify many modules that didn't need to be imported by default. We removed these imports and deferred them until they were actually needed. This resulted in an easy 40% reduction in heap memory usage at next to no cost. The two biggest wins were removing babel (a library that's only used for generating translation files) and beautifulsoup4, which was only used in a few actions and so wasn't needed by default.
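Deferring an import simply means moving it from module level into the function that needs it. A minimal sketch, with the standard library's html.parser standing in for a heavier third-party dependency such as beautifulsoup4; `extract_text` is a hypothetical helper, not a Frappe API:

```python
def extract_text(html: str) -> str:
    """Return the text content of an HTML fragment.

    The parser is imported at call time rather than at module level, so a
    process that never calls this function never loads the module.
    """
    from html.parser import HTMLParser  # deferred import

    class _TextCollector(HTMLParser):
        def __init__(self) -> None:
            super().__init__()
            self.parts: list[str] = []

        def handle_data(self, data: str) -> None:
            self.parts.append(data)  # called for each run of text between tags

    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    return "".join(collector.parts)
```

Python caches imported modules in sys.modules, so only the first call pays the import cost; later calls just look the module up.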

You can check the actual changes in these pull requests: #21467, #21473

Freezing the generational garbage collector

Gunicorn uses a pre-fork model to create multiple workers from a single master process. Since imported Python modules practically never change in production, one would assume that forking costs little extra memory, thanks to the copy-on-write (CoW) optimization in operating systems.

This, however, isn't the case with Python by default. CPython has two garbage collection mechanisms: reference counting, and a generational garbage collector that cleans up objects with cyclic references. The generational collector stores which generation an object belongs to in a header on the object itself, so a collection pass modifies the object and triggers CoW. Instagram Engineering found this problem long before we did and upstreamed their fix, `gc.freeze()`, to CPython in Python 3.7.
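To make the two mechanisms concrete, here is a small sketch (class and function names are illustrative): an object without cycles is freed by reference counting the moment its last reference disappears, while a reference cycle survives until the cyclic collector runs.

```python
import gc
import weakref


class Node:
    """Plain object that can take part in a reference cycle."""


def freed_by_refcount() -> bool:
    """An acyclic object is freed as soon as its last reference goes away."""
    node = Node()
    ref = weakref.ref(node)
    del node  # refcount drops to zero, so the object is freed immediately
    return ref() is None


def cycle_survives_until_collect() -> tuple[bool, bool]:
    """Return (alive after del, alive after gc.collect()) for a two-node cycle."""
    was_enabled = gc.isenabled()
    gc.disable()  # stop automatic collections so only the explicit one runs
    try:
        a, b = Node(), Node()
        a.other, b.other = b, a  # each keeps the other's refcount above zero
        ref = weakref.ref(a)
        del a, b
        alive_after_del = ref() is not None
        gc.collect()  # the generational collector finds and frees the cycle
        alive_after_collect = ref() is not None
    finally:
        if was_enabled:
            gc.enable()
    return alive_after_del, alive_after_collect
```

It is the second mechanism, walking tracked objects and updating their headers, that writes to pages a forked worker would otherwise share with its parent.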

The fix for us turned out to be a single line, `import gc; gc.freeze()`, added to app.py to freeze the generational garbage collector before the process is forked. This change alone reduced memory usage by ~31% (in a maxed-out configuration of 24 Gunicorn workers, with approximately 40MB of memory shared per worker).
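A minimal sketch of the freeze-then-fork pattern on a POSIX system. `fork_workers` is a hypothetical toy standing in for a pre-fork master like Gunicorn's; it is not how Gunicorn or Frappe is implemented. The Python gc documentation additionally suggests calling gc.disable() early in the parent and gc.enable() early in each child to avoid freed "holes" in shared pages; that step is left out here for brevity.

```python
import gc
import os
from typing import Callable


def prepare_master_for_fork() -> int:
    """Freeze every object the master process has allocated so far.

    gc.freeze() moves all tracked objects into a permanent generation that
    later collections ignore, so collections in forked workers don't write
    to (and thereby copy) pages inherited from the master.
    Returns the number of frozen objects.
    """
    gc.freeze()
    return gc.get_freeze_count()


def fork_workers(num_workers: int, work: Callable[[], None]) -> list[int]:
    """Toy pre-fork server: fork num_workers children that each run work().

    Returns each child's exit code (0 on success, 1 if work() raised).
    Requires os.fork, i.e. a POSIX system.
    """
    prepare_master_for_fork()  # freeze once, right before forking
    pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:  # in the child
            code = 0
            try:
                work()
            except BaseException:
                code = 1
            os._exit(code)  # leave without running the parent's cleanup handlers
        pids.append(pid)

    exit_codes = []
    for pid in pids:
        _, status = os.waitpid(pid, 0)
        exit_codes.append(os.waitstatus_to_exitcode(status))
    return exit_codes
```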

Since sharing imported modules was now beneficial, we added the most commonly used Python modules to a preload list so that the master process loads them automatically before it forks. This reduced memory usage by a further 5%.
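A preload list can be as simple as importing a fixed set of modules in the master before forking, then freezing so the newly created objects are covered too. A minimal sketch; `PRELOAD_MODULES` and `preload_modules` are hypothetical names, and the module list is illustrative, not Frappe's actual list:

```python
import gc
import importlib
import sys

# Hypothetical preload list for illustration only.
PRELOAD_MODULES = ["json", "decimal", "email.utils"]


def preload_modules(names: list[str]) -> list[str]:
    """Import each named module in the master process, then freeze the GC.

    Modules imported before fork() live in pages that workers share via
    copy-on-write, instead of every worker importing its own copy later.
    Returns the names that are now present in sys.modules.
    """
    for name in names:
        importlib.import_module(name)
    gc.freeze()  # freeze after the imports so their objects are frozen too
    return [name for name in names if name in sys.modules]
```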

These changes don't help background workers much yet, because they aren't forked from a common parent process; Supervisor starts each one as a separate process. However, v15 adds support for RQ's experimental worker pool, which can help reduce memory usage for a set of 8 workers by 60-80%.

To learn more, read Instagram Engineering's blog post on the topic. You can check the actual changes in these pull requests: #21474, #21475

Changing background workers' configuration

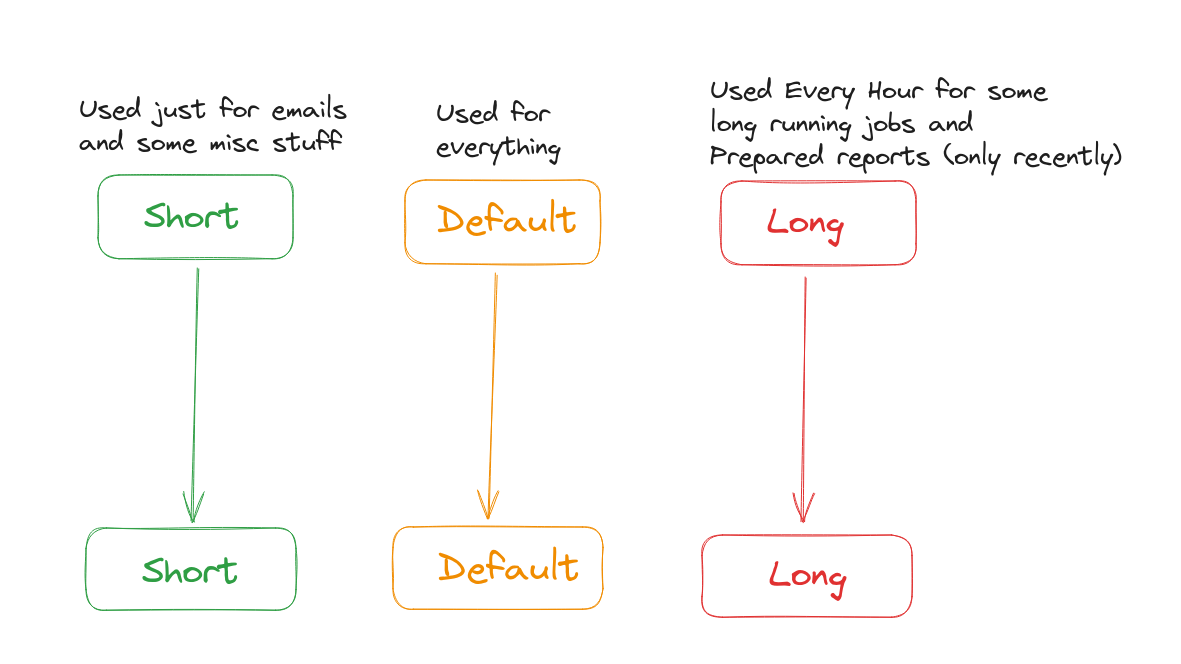

Frappe uses three background job queues and three types of workers to consume these queues. The design is optimized for two objectives:

- Short-running jobs triggered by users (such as emails, notifications, and webhooks) should be very responsive and be picked up almost instantly.

- Long-running jobs should not block the entire setup regardless of how many long-running jobs are pending.

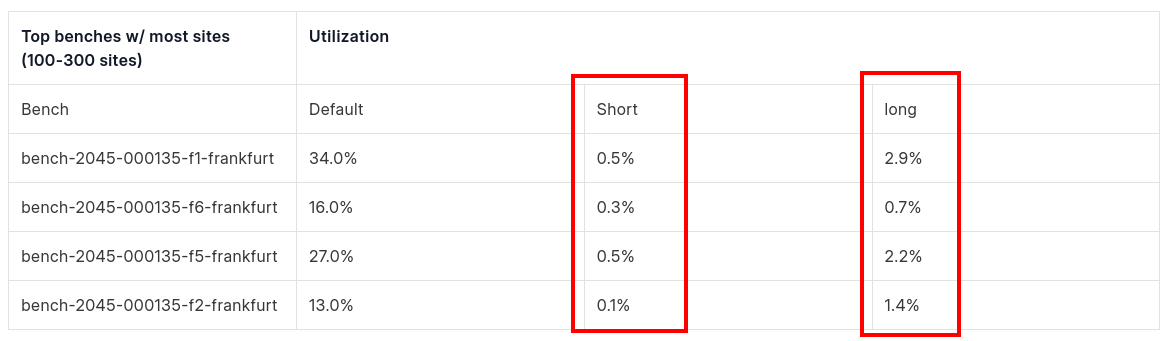

This configuration had served us well so far, but when we started inspecting its utilization, cracks began to show. Default workers did most of the work, short workers had very little to do, and long workers only worked sporadically, yet they all consumed the same amount of memory when idle.

We made two major changes to the configuration:

- Merged short and default workers, eliminating one entire worker type.

- Made long workers consume short and default queues when the long queue was empty.

This configuration still meets both original objectives while gaining these nicer properties:

- Reducing memory usage by 33%

- Increasing throughput of the entire system by putting long workers to use whenever the long queue is empty.

- Dividing workload equally among the workers.
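RQ workers given several queues check them in the order they are listed, which is what makes "consume short and default when long is empty" possible. The sketch below mimics that dequeue rule without RQ; the queue orders and the `next_job` helper are assumptions for illustration, since the text only says which queues each worker type consumes, not in what order.

```python
from collections import deque
from typing import Optional

# Assumed per-worker queue orders; the earliest non-empty queue wins.
SHORT_DEFAULT_WORKER_QUEUES = ["short", "default"]
LONG_WORKER_QUEUES = ["long", "default", "short"]


def next_job(queues: dict[str, deque], order: list[str]) -> Optional[tuple[str, str]]:
    """Pop the next job from the first non-empty queue in `order`.

    Returns (queue_name, job), or None if every listed queue is empty.
    """
    for name in order:
        if queues[name]:
            return name, queues[name].popleft()
    return None
```

A long worker drains the long queue first and then helps with the others, while the merged short/default workers never pick up long jobs, so a backlog of long jobs still cannot block user-triggered work.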

You can check the actual changes in these pull requests: #18995, #893

Key insights and learnings

- These improvements would not have been possible without awesome tools like Memray, py-spy, smem, and Linux utilities in general. Use the right tools for the job, and if they don't exist, build them, but don't attack these problems in the dark.

- All these changes were incrementally made with small pull requests. They all add up over time.

- The "core" architecture of your application isn't set in stone. Even small changes there can have big outcomes.

The Frappe Framework team set a goal this year of reducing resource usage by around 10%, and we achieved that goal in just one quarter. We aim to work on more such optimizations in the future. If you find this interesting and want to work on such problems, check out our careers page.
